In natural language processing, the order of tokens matters a great deal.

- I go home. (o)

- I home go. (x)

RNN-style models were developed to take this token order into account.

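Meaning and structure of RNNs

An RNN (Recurrent Neural Network) applies the same weights recurrently at every time step.

When the input x_t comes in, block A combines it with the hidden state carried over from x_{t-1}, using the same weights as at the previous step, and returns h_t.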

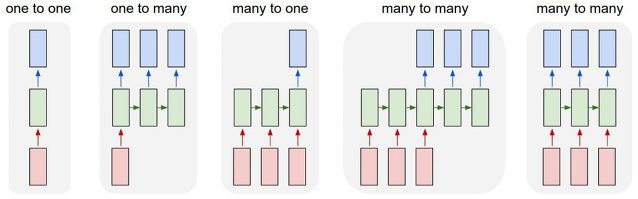
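The recurrence above can be sketched in a few lines of plain Python. This is a minimal sketch, assuming the common vanilla (Elman-style) update h_t = tanh(W_x x_t + W_h h_{t-1} + b); the post itself does not give a formula, and the names `rnn_step`, `rnn_forward`, `W_x`, `W_h`, and `b` are made up for illustration. The weights are random and untrained.

```python
import math
import random

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent step (assumed form): h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        s = b[i]
        s += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        s += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(s))
    return h_t

def rnn_forward(xs, W_x, W_h, b):
    """Apply the SAME weights at every time step and collect all hidden states."""
    h = [0.0] * len(b)  # initial hidden state
    hs = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_x, W_h, b)
        hs.append(h)
    return hs

random.seed(0)
input_size, hidden_size = 3, 4
W_x = [[random.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_h = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b = [0.0] * hidden_size

# Toy sequence of three one-hot "tokens".
xs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
hs = rnn_forward(xs, W_x, W_h, b)
hs_reversed = rnn_forward(list(reversed(xs)), W_x, W_h, b)
```

Because each h_t depends on the previous hidden state, feeding the same tokens in reverse order ends in a different final state, which is how the model becomes sensitive to token order.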

RNN variants and use cases

Red boxes are inputs, green boxes are RNN blocks, and blue boxes are outputs: either y (the label) or ŷ (the prediction).

one-to-many

Use case: image captioning (input: image, output: sequence of words/tokens, e.g. <start> Giraffes standing ... <end>)

many-to-one

- Sentiment analysis (sentiment classification): predicts the sentiment class (very positive, positive, neutral, ...)
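As a rough illustration of many-to-one, the sketch below reads a whole sequence and emits a single class distribution from the final hidden state. It assumes a vanilla tanh RNN with a linear layer and softmax on top; the class names, sizes, and the helpers `classify_sequence` and `rand_matrix` are hypothetical, and the weights are random rather than trained, so the predicted class is meaningless.

```python
import math
import random

def rnn_step(x_t, h_prev, W_x, W_h):
    # Assumed vanilla update: h_t = tanh(W_x x_t + W_h h_prev), bias omitted for brevity.
    return [
        math.tanh(
            sum(w * x for w, x in zip(W_x[i], x_t))
            + sum(w * h for w, h in zip(W_h[i], h_prev))
        )
        for i in range(len(W_h))
    ]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify_sequence(xs, W_x, W_h, W_out):
    h = [0.0] * len(W_h)
    for x_t in xs:  # read the entire sequence first
        h = rnn_step(x_t, h, W_x, W_h)
    logits = [sum(w * v for w, v in zip(row, h)) for row in W_out]
    return softmax(logits)  # one output for the whole sequence

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
CLASSES = ["negative", "neutral", "positive"]  # hypothetical label set
W_x = rand_matrix(4, 3, rng)
W_h = rand_matrix(4, 4, rng)
W_out = rand_matrix(len(CLASSES), 4, rng)

xs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # toy token vectors
probs = classify_sequence(xs, W_x, W_h, W_out)
predicted = CLASSES[probs.index(max(probs))]
```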

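many-to-many (left)

- The entire input is summarized into a single representation (the green box in the middle), and the output is generated from it.

- Inputs and outputs do not correspond one-to-one.

Machine translation: NMT (encoder + decoder)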
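The encoder + decoder idea can be sketched roughly as follows: the encoder compresses the source tokens into one context vector, and the decoder generates target tokens from it until it emits an end token. This is only a toy under stated assumptions: vanilla tanh RNNs, one-hot inputs, greedy decoding, random untrained weights, and hypothetical vocabulary sizes and token ids. Real NMT systems are considerably more involved.

```python
import math
import random

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_step(x_t, h_prev, W_x, W_h):
    # Assumed vanilla update: h_t = tanh(W_x x_t + W_h h_prev)
    return [math.tanh(a + b) for a, b in zip(matvec(W_x, x_t), matvec(W_h, h_prev))]

def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]

def encode(src_ids, vocab_size, W_x, W_h):
    h = [0.0] * len(W_h)
    for idx in src_ids:
        h = rnn_step(one_hot(idx, vocab_size), h, W_x, W_h)
    return h  # the single summary ("context") of the whole input

def decode(context, vocab_size, W_x, W_h, W_out, start_id, end_id, max_len):
    h, token, out = context, start_id, []
    for _ in range(max_len):
        h = rnn_step(one_hot(token, vocab_size), h, W_x, W_h)
        scores = matvec(W_out, h)
        token = scores.index(max(scores))  # greedy choice of the next token
        if token == end_id:
            break
        out.append(token)
    return out

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
SRC_VOCAB, TGT_VOCAB, HIDDEN = 5, 6, 4  # hypothetical sizes
START_ID, END_ID, MAX_LEN = 0, 1, 5     # hypothetical special tokens

enc_W_x, enc_W_h = rand_matrix(HIDDEN, SRC_VOCAB, rng), rand_matrix(HIDDEN, HIDDEN, rng)
dec_W_x, dec_W_h = rand_matrix(HIDDEN, TGT_VOCAB, rng), rand_matrix(HIDDEN, HIDDEN, rng)
dec_W_out = rand_matrix(TGT_VOCAB, HIDDEN, rng)

src_ids = [2, 3, 4]
context = encode(src_ids, SRC_VOCAB, enc_W_x, enc_W_h)
out_ids = decode(context, TGT_VOCAB, dec_W_x, dec_W_h, dec_W_out, START_ID, END_ID, MAX_LEN)
```

The output length is decided by the decoder (end token or `MAX_LEN`), not by the input length, which is why inputs and outputs need not line up one-to-one.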

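many-to-many (right)

- An output is produced at every time step.

Used for named entity recognition (NER), an information extraction task.

Examples of NER: location, organization

1. Jeju and Seoul are one hour apart by plane. (location)

2. Seoul City Hall is closed today. (organization)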
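For the per-time-step variant, a minimal sketch might emit one tag for every input token, as an NER tagger would. The tag set, the random token vectors, and the `tag_sequence` helper are assumptions for illustration; with untrained random weights the tags themselves carry no meaning, only the one-output-per-step shape does.

```python
import math
import random

TAGS = ["O", "LOC", "ORG"]  # hypothetical tag set: outside, location, organization

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def tag_sequence(xs, W_x, W_h, W_out):
    h = [0.0] * len(W_h)
    tags = []
    for x_t in xs:
        # Assumed vanilla update: h_t = tanh(W_x x_t + W_h h_prev)
        h = [math.tanh(a + b) for a, b in zip(matvec(W_x, x_t), matvec(W_h, h))]
        scores = matvec(W_out, h)
        tags.append(TAGS[scores.index(max(scores))])  # one output per time step
    return tags

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
EMBED, HIDDEN = 3, 4  # hypothetical sizes
tokens = ["Seoul", "City", "Hall", "is", "closed", "today"]
# Stand-in token vectors; a real model would use learned embeddings.
xs = [[rng.uniform(-1.0, 1.0) for _ in range(EMBED)] for _ in tokens]

W_x = rand_matrix(HIDDEN, EMBED, rng)
W_h = rand_matrix(HIDDEN, HIDDEN, rng)
W_out = rand_matrix(len(TAGS), HIDDEN, rng)

tags = tag_sequence(xs, W_x, W_h, W_out)
pairs = list(zip(tokens, tags))
```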
