[[ 0 0 1 2] [ 0 0 0 3] [ 4 5 6 7] [ 0 8 9 10] [ 0 11 12 13] [ 0 0 0 14] [ 0 0 0 15] [ 0 0 16 17] [ 0 0 18 19] [ 0 0 0 20]]자연어 = 우리가 평소에 말하는 음성이나 텍스트

자연어 처리(Natural Language Processing, NLP) : 자연어를 컴퓨터가 인식하고 처리하는 것

- 텍스트 전처리 과정

토큰화(tokenization) : 입력된 텍스트를 잘게 나누는 과정

keras, text 모듈의 text_to_word_sequence() 함수 : 문장을 단어 단위로 나눔

from tensorflow.keras.preprocessing.text import text_to_word_sequence

text = ‘해보지 않으면 해낼 수 없다’

result = text_to_word_sequence(text)

print(result)[‘해보지’, ‘않으면’, ‘해낼’, ‘수’, ‘없다’]

먼저 텍스트의 각 단어를 나누어 토큰화합니다.

텍스트의 단어를 토큰화해야 딥러닝에서 인식됩니다.

토큰화 한 결과는 딥러닝에서 사용할 수 있습니다.

케라스의 Tokenizer() 함수 : 단어의 빈도 수 계산

from tensorflow.keras.preprocessing.text import Tokenizer

docs = [‘먼저 텍스트의 각 단어를 나누어 토큰화합니다.’, ‘텍스트의 단어로 토큰화해야 딥러닝에서 인식됩니다.’, ‘토큰화한 결과는 딥러닝에서 사용할 수 있습니다.’,]

#Tokenizer()를 이용해 전처리

token = Tokenizer() # 토큰화 함수 지정

token.fit_on_texts(docs) # 토큰화 함수에 문장 적용

print(token.word_counts) # 단어의 빈도 수를 계산한 결과 출력

#word_counts= 단어의 빈도 수를 계산해주는 함수OrderedDict([(‘먼저’, 1), (‘텍스트의’, 2), (‘각’, 1), (‘단어를’, 1), (‘나누어’, 1), (‘토큰화’, 3), (‘합니다’, 1), (‘단어로’, 1), (‘해야’, 1), (‘딥러닝에서’, 2), (‘인식됩니다’, 1), (‘한’, 1), (‘결과는’, 1), (‘사용’, 1), (‘할’, 1), (‘수’, 1), (‘있습니다’, 1)])

‘토큰화’가 3번, ‘텍스트의’와 ‘딥러닝에서’가 2번, 나머지가 1번씩 나오고 있다

순서를 기억하는 OrderedDict 클래스에 담겨 있는 형태로 출력

document_count() 함수 : 총 몇 개의 문장이 들어있는가?

print(token.document_count)실행결과 : 3

word_docs() 함수 : 각 단어들이 몇 개의 문장에 나오는가? (출력되는 순서는 랜덤)

print(token.word_docs){‘한’: 1, ‘먼저’: 1, ‘나누어’: 1, ‘해야’: 1, ‘토큰화’: 3, ‘결과는’: 1, ‘각’: 1, ‘단어를’: 1, ‘인식됩니다’: 1, ‘있습니다’: 1, ‘할’: 1, ‘단어로’: 1, ‘수’: 1, ‘합니다’: 1, ‘딥러닝에서’: 2, ‘사용’: 1, ‘텍스트의’: 2}

word_index() 함수 : 각 단어에 매겨진 인덱스 값

print(token.word_index){'딥러닝에서': 3, '단어를': 6, '결과는': 13, '수': 16, '한': 12, '인식됩니다': 11, '합니다': 8, '텍스트의': 2, '토큰화': 1, '할': 15, '각': 5, '있습니다': 17, '먼저': 4, '나누어': 7, '해야': 10, '사용': 14, '단어로': 9}

단어의 원-핫 인코딩

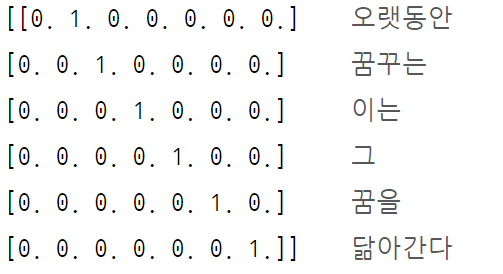

‘오랫동안 꿈꾸는 이는 그 꿈을 닮아간다’

원-핫 인코딩 = 각 단어를 모두 0으로 바꾸어 주고 원하는 단어만 1로 바꾸어 주는 것

이를 수행하기 위해 먼저 단어 수만큼 0으로 채워진 벡터 공간으로 바꾸기

파이썬 배열의 인덱스는 0부터 시작하므로, 맨 앞에 0이 추가됨

이제 각 단어가 배열 내에서 해당하는 위치를 1로 바꿔서 벡터화할 수 있다.

1. 토큰화 함수를 불러와 단어 단위로 토큰화하고 각 단어의 인덱스 값을 출력

from tensorflow.keras.preprocessing.text import Tokenizer

text=“오랫동안 꿈꾸는 이는 그 꿈을 닮아간다”

token = Tokenizer()

token.fit_on_texts([text])

print(token.word_index){‘꿈을’: 5, ‘꿈꾸는’: 2, ‘그’: 4, ‘닮아간다’: 6, ‘이는’: 3, ‘오랫동안’: 1}

2. 원-핫 인코딩

케라스에서 제공하는 Tokenizer의 texts_to_sequences() 함수 : 만들어진 토큰의 인덱스로만 채워진 새로운 배열 생성

x = token.texts_to_sequences([text])

print(x)[[1,2,3,4,5,6]]

to_categorical() 함수 - 1~6까지의 정수로 인덱스 되어 있는 것을 0과 1로만 이루어진 배열로 바꾸기, 원-핫 인코딩 진행

. 배열 맨 앞에 0이 추가되므로 단어 수보다 1이 더 많게 인덱스 숫자를 잡아 주는 것에 유의!

from keras.utils import to_categorical

# 인덱스 수에 하나를 추가해서 원-핫 인코딩 배열 만들기

word_size = len(t.word_index) +1

x = to_categorical(x, num_classes=word_size)

print(x)

원-핫 인코딩을 그대로 사용하면 벡터의 길이가 너무 길어진다는 단점( 예를 들어 1만 개의 단어 토큰으로 이루어진 말뭉치를 다룬다고 할 때, 이 데이터를 원-핫 인코딩으로 벡터화하면 9,999개의 0과 하나의 1로 이루어진 단어 벡터를 1만 개나 만들어야 한다.) 이러한 공간적 낭비를 해결하기 위해 등장한 것이 단어 임베딩(word embedding)이라는 방법

단어 임베딩은 주어진 배열을 정해진 길이로 압축

단어 임베딩으로 얻은 결과가 밀집된 정보를 가지고 있고 공간의 낭비가 적다

이런 결과가 가능한 이유는 각 단어 간의 유사도를 계산했기 때문

단어 간 유사도는 어떻게 계산하는 것일까?

오차 역전파

케라스에서 제공하는 Embedding()함수

from keras.layers import Embedding model = Sequential() model.add(Embedding(16,4)Embedding(16,4)가 의미하는 바는// ‘입력’과 ‘출력’의 크기// 입력될 총 단어 수는 16, 임베딩 후 출력되는 벡터 크기는 4

여기에 단어를 매번 얼마나 입력할지를 추가로 지정할 수 있다.

Embedding(16,4, input_length=2)라고 하면 총 입력되는 단어 수는 16개이지만 매번 2개씩만 넣겠다는 뜻

텍스트 감정을 예측하는 딥러닝 모델 -영화 리뷰를 딥러닝 모델로 학습해서, 각 리뷰가 긍정적인지 부정적인지 예측

1. 짧은 리뷰 10개를 불러와 각각 긍정이면 1이라는 클래스를, 부정적이면 0이라는 클래스로 지정

# 텍스트 리뷰 자료 지정

docs = [‘너무 재밌네요’,‘최고예요’,‘참 잘 만든 영화예요’,‘추천하고 싶은 영화입니다.’,‘한 번 더 보고싶네요’,‘글쎄요’,‘별로예요’,‘생각보다 지루하네요’,‘연기가 어색해요’,‘재미없어요’]

# 긍정 리뷰는 1, 부정 리뷰는 0으로 클래스 지정

class = array([1,1,1,1,1,0,0,0,0,0])

2. 토큰화 과정

Tokenizer() 함수의 fit_on_text: 각 단어를 하나의 토큰으로 변환

# 토큰화

token = Tokenizer()

token.fit_on_texts(docs)

print(token.word_index) # 토큰화 된 결과를 출력해 확인{'생각보다': 16, '만든': 6, '영화입니다': 10, '한 번': 11, '영화예요': 7, '싶은': 9, '보고싶네요': 13, '어색해요': 19, '재미없어요': 20, '더': 12, '추천하고': 8, '지루하네요': 17, '최고예요': 3, '잘': 5, '참': 4, '재밌네요': 2, '별로예요': 15, '글쎄요': 14, '연기가': 18, '너무': 1}.

3. 토큰에 지정된 인덱스로 새로운 배열을 생성

x = token.texts_to_sequences(docs)

print(x)[[1, 2], [3], [4, 5, 6, 7], [8, 9, 10], [11, 12, 13], [14], [15], [16, 17], [18, 19], [20]]

단어가 1부터 20까지의 숫자로 토큰화

그런데 입력된 리뷰 데이터의 토큰 수가 각각 다르다는 것에 유의하시기 바랍니다.

예를 들어 ‘최고예요’는 하나의 토큰 ([3])이지만 ‘참 잘 만든 영화예요’는 4개의 토큰([4, 5, 6, 7])을 가지고 있지요.

딥러닝 모델에 입력을 하려면 학습 데이터의 길이가 동일해야 합니다.=>패딩( padding)

pad_sequnce()

padded_x = pad_sequences(x, 4) # 서로 다른 길이의 데이터를 4로 맞추기

print(padded_x)[[ 0 0 1 2]

[ 0 0 0 3]

[ 4 5 6 7]

[ 0 8 9 10]

[ 0 11 12 13]

[ 0 0 0 14]

[ 0 0 0 15]

[ 0 0 16 17]

[ 0 0 18 19]

[ 0 0 0 20]]

4. 단어 임베딩을 포함, 딥러닝 모델을 만들고 결과를 출력

임베딩 함수에 필요한 세 가지 파라미터= ‘입력, 출력, 단어 수’

1. 총 몇 개의 단어 집합에서(입력),

2. 몇 개의 임베딩 결과를 사용할 것인지(출력),

3. 그리고 매번 입력될 단어 수는 몇 개로 할지(단어 수)

1 . word_size라는 변수를 만든 뒤, 길이를 세는 len() 함수를 이용해 word_index 값을 앞서 만든 변수에 대입합니다. 이때 전체 단어의 맨 앞에 0이 먼저 나와야 하므로 총 단어 수에 1을 더하는 것을 잊지 마시기 바랍니다.

word_size = len(token.word_index) +12. 이번 예제에서는 word_size만큼의 입력 값을 이용해 8개의 임베딩 결과를 만들겠습니다. 여기서 8이라는 숫자는 임의로 정한 것입니다. 데이터에 따라 적절한 값으로 바꿀 수 있습니다. 이때 만들어진 8개의 임베딩 결과는 우리 눈에 보이지 않습니다. 내부에서 계산하여 딥러닝의 레이어로 활용됩니다.

3. 패딩 과정을 거쳐 4개의 길이로 맞춰 주었으므로 4개의 단어가 들어가게 설정하면 임베딩 과정은 다음 한 줄로 표현됩니다.

Embedding(word_size, 8, input_length=4)모델 생성

# 단어 임베딩을 포함하여 딥러닝 모델을 만들고 결과를 출력

model = Sequential()

model.add(Embedding(word_size, 8, input_length=4))

model.add(Flatten())

model.add(Dense(1, activation='sigmoid'))

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

model.fit(padded_x, labels, epochs=20)

print("\n Accuracy: %.4f" % (model.evaluate(padded_x, labels)[1]))최적화 함수로 adam()을 사용하고 오차 함수로는 binary_crossentropy()를 사용했습니다. 30번 반복하고나서 정확도를 계산하여 출력하게 했습니다.

import numpy

import tensorflow as tf

from numpy import array

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense,Flatten,Embedding

# 텍스트 리뷰 자료 지정

docs = ['너무 재밌네요','최고예요','참 잘 만든 영화예요','추천하고 싶은 영화입니다.','한번 더 보고싶네요','글쎄요','별로예요','생각보다 지루하네요','연기가 어색해요','재미없어요']

# 긍정 리뷰는 1, 부정 리뷰는 0으로 클래스 지정

classes = array([1,1,1,1,1,0,0,0,0,0])

# 토큰화

token = Tokenizer()

token.fit_on_texts(docs)

print(token.word_index)

# 패딩, 서로 다른 길이의 데이터를 4로 맞춤

padded_x = pad_sequences(x, 4)

"\n패딩 결과\n", print(padded_x)

# 임베딩에 입력될 단어 수 지정

word_size = len(token.word_index)+1

# 단어 임베딩을 포함하여 딥러닝 모델을 만들고 결과 출력

model = Sequential()

model.add(Embedding(word_size, 8, input_length=4))

model.add(Flatten())

model.add(Dense(1, activation='sigmoid'))

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

model.fit(padded_x, labels, epochs=20)

print("\n Accuracy: %.4f" % (model.evaluate(padded_x, classes)[1]))실행 결과

Train on 10 samples

Epoch 1/20 10/10 [==============================] - 0s 7ms/sample - loss: 0.7047 - accuracy: 0.3000

Epoch 2/20 10/10 [==============================] - 0s 183us/sample - loss: 0.7027 - accuracy: 0.4000

(중략)

Epoch 20/20 10/10 [==============================] - 0s 150us/sample - loss: 0.6668 - accuracy: 0.9000 10/10 [==============================] - 0s 2ms/sample - loss: 0.6648 - accuracy: 0.9000

학습 후 10개의 리뷰 샘플 중 9개의 긍정 또는 부정을 맞혔음

'딥러닝 > Today I learned :' 카테고리의 다른 글

| [딥러닝] itertools 라이브러리 (0) | 2021.04.01 |

|---|---|

| [딥러닝] Numpy 라이브러리 (0) | 2021.03.31 |

| [딥러닝] 이미지 인식 , 컨볼루션 신경망(CNN) (0) | 2021.03.27 |

| [딥러닝] 선형 회귀 적용하기 (0) | 2021.03.26 |

| [딥러닝] 와인의 종류 예측하기 (0) | 2021.03.25 |